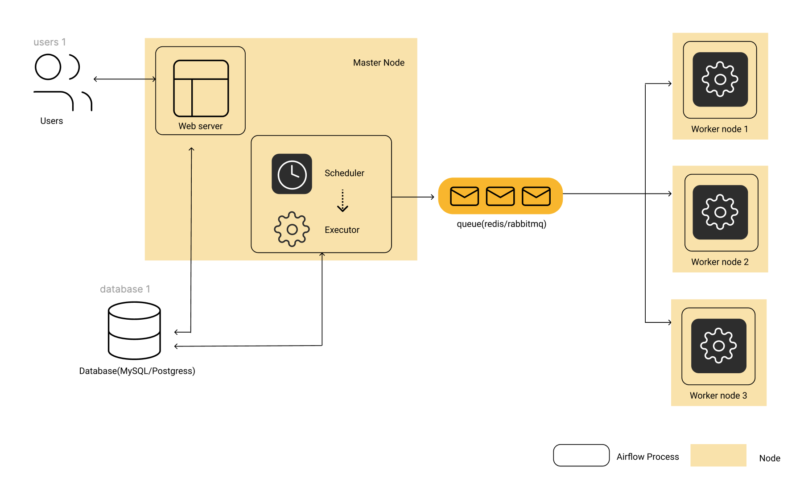

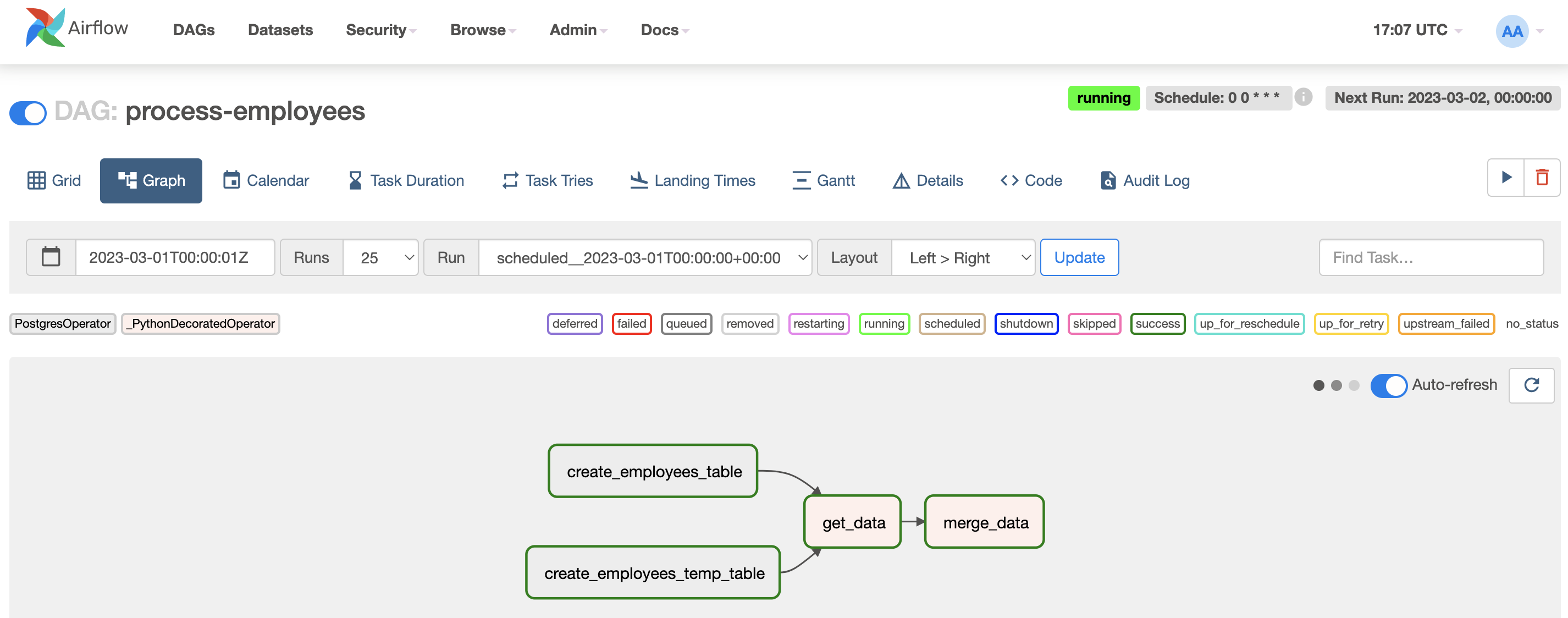

The same behaviour is observed with Gunicorn 19.9.0 (which the OP uses) and 19.10.0 (the version suggested by the official constraints file), against Airflow master (I could not find any relevant differences in 1.10.10). I expect the webserver to handle signals even when the startup process hasn't completed yet. You can see that ^C does not lead to a shutdown of the process: `^C^C^C^C^C^C^C^C^C^C^C^C^C^C Can't connect to ('0.0.0.0', 8080)`

The Kubernetes executor runs each task instance in its own pod on a Kubernetes cluster. KubernetesExecutor runs as a process in the Airflow scheduler. The scheduler itself does not necessarily need to be running on Kubernetes, but it does need access to a Kubernetes cluster. KubernetesExecutor also requires a non-SQLite database in the backend.

To start the Airflow web server and scheduler, run the following. Starting from nothing, you run `airflow scheduler`: now you have a scheduler running. Run `airflow webserver`: now you have a webserver running.

It is unclear whether this directly causes the crashes that some people are seeing or whether it is just an annoying cosmetic log. Others have reported seeing the same issue. I found the following issue in the Airflow Jira:

As of yet there is no resolution to this problem.

I am using Python 3.5, Airflow 1.8, Celery 4.1.0, and RabbitMQ 3.5.7 as the worker. It looks like I am having a problem with RabbitMQ, but I cannot figure out the reason.

Even with no workers running, after starting the webserver and scheduler fresh, the DB connection warning starts to appear as soon as the scheduler fills up the Airflow pools. How do I resolve the database connection errors? Is there a setting to increase the number of database connections, and if so, where is it? Do I need to handle the workers differently?
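
On the question of where the pool size is set, here is a minimal sketch. It assumes the 1.10-era layout, in which `sql_alchemy_pool_size` lives in the `[core]` section of airflow.cfg and can be overridden by an `AIRFLOW__CORE__SQL_ALCHEMY_POOL_SIZE` environment variable. The config text and values are hypothetical. The helper only shows which value a process would pick up, which is worth checking when an edit to airflow.cfg seems to have no effect.

```python
import configparser
from typing import Mapping

# Hypothetical airflow.cfg excerpt; values are illustrative only.
SAMPLE_CFG = """
[core]
sql_alchemy_conn = postgresql+psycopg2://airflow:airflow@localhost:5432/airflow
sql_alchemy_pool_size = 5
"""

# Airflow-style override: AIRFLOW__{SECTION}__{KEY}
ENV_KEY = "AIRFLOW__CORE__SQL_ALCHEMY_POOL_SIZE"


def effective_pool_size(cfg_text: str, environ: Mapping[str, str], default: int = 5) -> int:
    """Return the pool size a process would see.

    Assumes the environment variable takes precedence over airflow.cfg,
    and falls back to `default` when neither sets the option.
    """
    if ENV_KEY in environ:
        return int(environ[ENV_KEY])
    # interpolation=None: real airflow.cfg files contain '%' in log formats,
    # which the default interpolation would choke on.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(cfg_text)
    return parser.getint("core", "sql_alchemy_pool_size", fallback=default)


if __name__ == "__main__":
    import os
    print(effective_pool_size(SAMPLE_CFG, os.environ))
```

Passing the environment in as a mapping keeps the lookup easy to test. The same function can also be pointed at the contents of a real airflow.cfg.
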
I run three commands (`airflow webserver`, `airflow scheduler`, and `airflow worker`), so there should only be one worker, and I don't see why that would overload the database.

I'm using CeleryExecutor, and I suspect that the number of workers is overloading the database connections. I've tried to increase the SQLAlchemy pool size setting in airflow.cfg, but that had no effect: `# The SqlAlchemy pool size is the maximum number of database connections in the pool.` The following warning shows up where it didn't before: `WARNING - DB connection invalidated. Reconnecting.` Eventually, I'll also get this error: `FATAL: remaining connection slots are reserved for non-replication superuser connections`

Establish a connection to /airflow/airflow.db through some DBMS (I'm using. If you're running Airflow for the first time in a new. Once it's running, use a similar command to run the Scheduler: airflow. Use the `meltano schedule` command to create pipeline schedules in your project, to be run by Airflow. Docker configuration for Airflow: `airflow-scheduler` - The scheduler monitors.
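
PostgreSQL raises the FATAL message when ordinary (non-superuser) connections have used every slot except those held back by `superuser_reserved_connections`. The following back-of-the-envelope sketch makes several assumptions. Each Airflow process keeps its own SQLAlchemy `QueuePool`, with the defaults `pool_size=5` and `max_overflow=10`. PostgreSQL runs with its defaults of `max_connections=100` and `superuser_reserved_connections=3`. The process count is hypothetical. Under these assumptions, raising the pool size makes this error more likely, not less.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PoolSettings:
    pool_size: int = 5      # SQLAlchemy QueuePool default
    max_overflow: int = 10  # SQLAlchemy QueuePool default


def worst_case_connections(processes: int, pool: PoolSettings) -> int:
    """Upper bound if every process opens its whole pool plus overflow."""
    return processes * (pool.pool_size + pool.max_overflow)


def fits_in_postgres(total: int, max_connections: int = 100,
                     superuser_reserved: int = 3) -> bool:
    """True if `total` ordinary connections stay within the non-reserved slots."""
    return total <= max_connections - superuser_reserved


if __name__ == "__main__":
    processes = 8  # hypothetical: webserver workers + scheduler + Celery worker processes
    for pool in (PoolSettings(),
                 PoolSettings(pool_size=20),
                 PoolSettings(pool_size=5, max_overflow=0)):
        total = worst_case_connections(processes, pool)
        print(pool, total, fits_in_postgres(total))
```

This is an upper bound, not a measurement. Counting the actual rows in `pg_stat_activity` on the server gives the real number at any moment.
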

Airflow uses SQLAlchemy to connect to the database, which requires you to configure the database URL. You can do this in the `sql_alchemy_conn` option. The Airflow scheduler modifies entries in the Airflow metadata database, which stores configurations such as variables, connections, user information, roles, and policies.

We use the Airflow Helm charts to deploy Airflow. We have configured a webserver, a scheduler, and a VMSS for workers in Airflow. In PythonOperator or BashOperator tasks, use. Or you can check the process listening on port 8793 with `lsof -i:8793`, and if you don't need that process, you can kill it.
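
The database URL is a SQLAlchemy URL, so a deployment script can check it before the scheduler starts. Below is a minimal sketch of a hypothetical pre-flight helper (not part of Airflow). It rejects a SQLite `sql_alchemy_conn` when the KubernetesExecutor is used, in line with that executor's requirement of a non-SQLite backend.

```python
from urllib.parse import urlsplit


def backend_dialect(conn_url: str) -> str:
    """Return the dialect of a SQLAlchemy URL, dropping any '+driver' suffix.

    e.g. 'postgresql+psycopg2://user:pw@host/db' -> 'postgresql'
    """
    return urlsplit(conn_url).scheme.split("+", 1)[0]


def check_backend_for_executor(conn_url: str, executor: str) -> None:
    """Raise if a SQLite metadata database is paired with KubernetesExecutor."""
    if executor == "KubernetesExecutor" and backend_dialect(conn_url) == "sqlite":
        raise ValueError("KubernetesExecutor requires a non-SQLite metadata database")


if __name__ == "__main__":
    # Hypothetical URL; passes the check silently.
    check_backend_for_executor(
        "postgresql+psycopg2://airflow:airflow@postgres/airflow", "KubernetesExecutor")
    print(backend_dialect("sqlite:////airflow/airflow.db"))
```
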

Airflow is a popular open-source workflow scheduler commonly used for data orchestration. Connections store credentials and are used by tasks for secure access to external systems. By default, Cube operators use `cube_default` as the Airflow connection name.

Once you have saved this YAML file as postgres-airflow.yaml and have kubectl connected to your Kubernetes cluster, run this command to deploy the Postgres instance. After running this, you should be able to run `kubectl get pods` and see your Postgres pod running.

I am upgrading our Airflow instance from 1.9 to 1.10.3, and whenever the scheduler runs, I now get a warning that the database connection has been invalidated and that it's trying to reconnect. A bunch of these errors show up in a row. The console also indicates that tasks are being scheduled, but when I check the database, nothing is ever being written.

In the Docker container running the Airflow server, a process was already running on port 8793, which the `worker_log_server_port` setting in airflow.cfg refers to by default. I changed the port to 8795, and the `airflow worker` command worked.
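
Before starting `airflow worker`, you can check whether the log-server port is already taken. This avoids a confusing startup failure. The minimal sketch below assumes you only need a yes/no answer on a POSIX host such as a Docker container. It does not tell you which process holds the port; `lsof -i:8793` does that. The helper name is hypothetical. Because it does not set `SO_REUSEADDR`, it may also report a port as busy while old connections linger in `TIME_WAIT`.

```python
import errno
import socket


def port_in_use(port: int, host: str = "0.0.0.0") -> bool:
    """Return True if binding host:port fails with 'address already in use'."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        try:
            sock.bind((host, port))
        except OSError as exc:
            if exc.errno == errno.EADDRINUSE:
                return True
            raise  # e.g. permission denied: not a "port busy" answer
    return False


if __name__ == "__main__":
    # 8793 is the default worker log server port.
    print("8793 busy:", port_in_use(8793))
```

If the port is busy and the other process is needed, moving `worker_log_server_port` to a free port is the alternative to killing that process.
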
